NSX-V to NSX-T Migration using Layer 2 Bridging

This blog explores how to migrate workloads from hosts prepared for NSX-V to hosts prepared for NSX-T using NSX-T Layer 2 bridging.

Figure: Cluster Setup

In the lab setup, four hosts (ESXi 1 to ESXi 4) are prepared for NSX-T and the remaining four hosts (ESXi 5 to ESXi 8) are prepared for NSX-V.

NSX-T edges used for Layer 2 bridging are on NSX-V prepared hosts.

Figure: Logical Setup

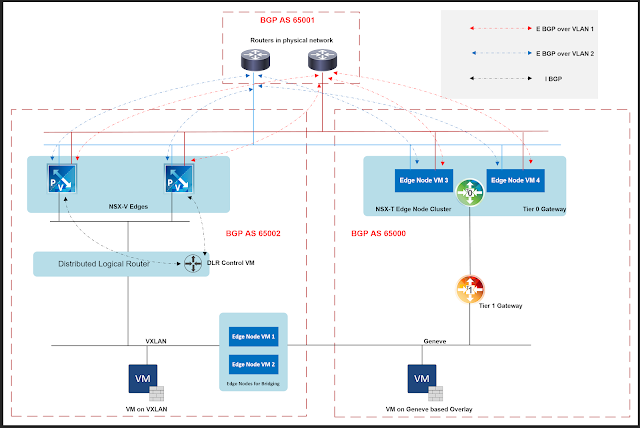

The above is the logical setup used in this lab.

Figure: IP Addressing

The above shows the IP addressing used in the lab.

Figure: BGP AS Numbering

The above picture shows the BGP AS numbering used.

Figure: BGP peerings

The above diagram shows the BGP peerings.

eBGP peerings run between NSX and the physical network.

iBGP runs between the NSX-V edges and the Distributed Logical Router.

There is no routing protocol between the NSX-T Tier 1 Gateway and the upstream Tier 0 Gateway.

During migration, traffic flows through the NSX-V edges, so the bridged subnets should not yet be advertised from the NSX-T side. You can do either of the following:

1. Do not advertise connected subnets on the Tier 1 Gateway.

2. Keep BGP disabled on the Tier 0 Gateway.

NSX-V Setup

Figure: NSX-V Prepared Cluster

Figure: Workloads hosted on both clusters

We need to make sure that the security settings of the port group (corresponding to the VXLAN being bridged) are set as follows:

- Enable promiscuous mode on the port group.

- Allow forged transmits on the port group.
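The two settings above can be checked with a small helper before bridging is enabled. This is only a sketch: the dictionary stands in for however you export the port group's security policy, and the key names `allowPromiscuous` and `forgedTransmits` are borrowed from the vSphere security policy property names as an assumption, not read from this lab.

```python
def bridging_security_gaps(security_policy: dict) -> list:
    """Return the settings that bridging needs but that are not enabled."""
    # Both settings must be enabled on the VXLAN-backed port group.
    required = ("allowPromiscuous", "forgedTransmits")
    return [name for name in required if not security_policy.get(name, False)]


# Hypothetical export of a port group's security policy.
portgroup_policy = {
    "allowPromiscuous": True,
    "forgedTransmits": False,
    "macChanges": False,
}

gaps = bridging_security_gaps(portgroup_policy)
if gaps:
    print("Enable before bridging:", ", ".join(gaps))
else:
    print("Port group is ready for bridging")
```

An empty list means both settings are already in place.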

Figure: Security settings of VXLAN backed port group

NSX-T Setup

You must change the default MAC address of the NSX-T virtual distributed router so that it does not use the same MAC address that is used by the Distributed Logical Router (DLR) of NSX-V.

The virtual distributed routers (VDR) in all the transport nodes of an NSX-T environment use the default global MAC address. You can change the global MAC address of the NSX-T VDR by updating the global gateway configuration with the following PUT API:

PUT https://{policy-manager}/policy/api/v1/infra/global-config
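As a rough sketch of how the request body for that PUT could be prepared, the helper below copies the current global config and changes only the VDR MAC. It assumes the body is the JSON returned by a GET on the same URL, that the MAC lives in a field named `vdr_mac`, and that `_revision` must be sent back unchanged; check these against the API reference for your NSX-T version. The sample values are placeholders, not output from this lab.

```python
import json
import re

# Matches a colon-separated MAC address such as 02:50:56:56:44:99.
MAC_RE = re.compile(r"^[0-9A-Fa-f]{2}(:[0-9A-Fa-f]{2}){5}$")


def build_global_config_update(current_config: dict, new_vdr_mac: str) -> dict:
    """Return a PUT body that differs from current_config only in vdr_mac."""
    if not MAC_RE.match(new_vdr_mac):
        raise ValueError(f"not a valid MAC address: {new_vdr_mac!r}")
    body = dict(current_config)  # shallow copy; the GET result is left untouched
    body["vdr_mac"] = new_vdr_mac  # assumed field name for the global VDR MAC
    return body


# Placeholder for the JSON returned by GET .../policy/api/v1/infra/global-config.
current = {
    "resource_type": "GlobalConfig",
    "l3_forwarding_mode": "IPV4_ONLY",
    "mtu": 1700,
    "vdr_mac": "02:50:56:56:44:52",
    "_revision": 0,
}

payload = build_global_config_update(current, "02:50:56:56:44:99")
print(json.dumps(payload, indent=2))
```

The printed JSON is what would be sent as the PUT body with whatever HTTP client and authentication you normally use against the NSX-T manager.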

|

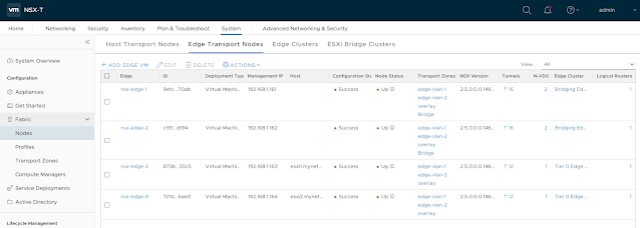

| Edge used for L2 Bridging |

Figure: NSX-T Edge Clusters

Figure: Tier 0 Gateway

|

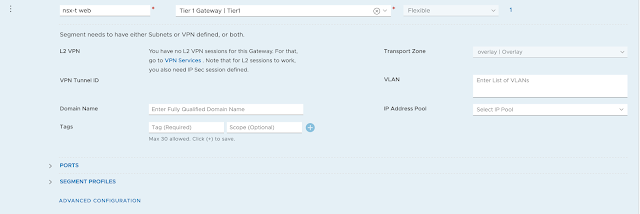

| Tier 1 Gateway |

Figure: NSX-T Segment connected to Tier 1 Gateway

Figure: Gateway set on NSX-T Segment

Validation of the setup

Figure: VM on NSX-T Segment

|

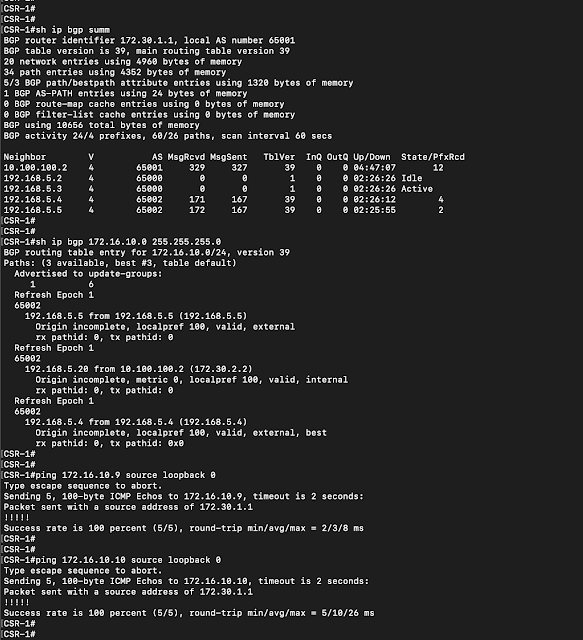

| Traffic flow from VM on NSX-T Segment to loopback interface of physical router |

Migration

Now we migrate the VM on the NSX-V prepared cluster to the NSX-T prepared cluster.

With this, both workloads are on the NSX-T prepared cluster.

At this point, we need to ensure that the workloads use the Tier 1 Gateway as their default gateway.

We then ensure that the BGP peerings between the physical routers and the NSX-T edges are all up, and disable the BGP peerings between the physical routers and the NSX-V edges.

Figure: Workloads migrated to NSX-T prepared cluster

The above picture shows that the workloads have moved to the NSX-T prepared cluster.

Figure: After migration, traffic flow from physical router to VMs on NSX-T segment

From the physical router, we validate that BGP peerings with NSX-T edges are now up and those with NSX-V edges are down.

Traffic now starts flowing through NSX-T edges.

|

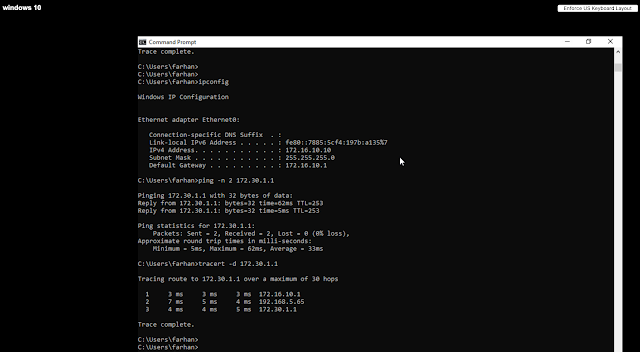

| VM on NSX-T Segment to loopback IP of physical router |

Figure: VM on NSX-T segment to loopback IP of physical router

Figure: Traffic flow after migration

Further reading:

NSX Techzone – Techzone offers a lot of guidance on NSX-V to NSX-T migration.

This is a great blog! Is there any way to accomplish this if your V infrastructure is using OSPF and, of course, our new T will be BGP?


The real answer depends on the routing protocol in the core. This is bridging, so it's all L2. From the core, routing would still have to see your NSX-V OSPF area as the preferred site of ingress/egress. Then, once you cut over the gateway to NSX-T, the (U)DLR will stop advertising that subnet and it will start being advertised in BGP. Even if you have the first site as the preferred route, the subnet is no longer advertised from there, so traffic would then flow through your BGP site. Again, it is the routing protocol at the core (or wherever the redistribution takes place) where you would have to play with metrics to ensure symmetric data flow.
